HADOOP

The best-known Big Data technology is Hadoop. It is used by Yahoo, eBay, LinkedIn and Facebook, and was inspired by Google’s publications on MapReduce, GoogleFS and BigTable. Because Hadoop runs on commodity hardware (usually Intel PCs running Linux, with one or two CPUs and a few TB of disk, without any RAID replication), it allows these companies to store huge quantities of data (petabytes or more) at very low cost compared to SAN storage systems.

Hadoop is an open source suite hosted by the Apache Software Foundation: http://hadoop.apache.org/.

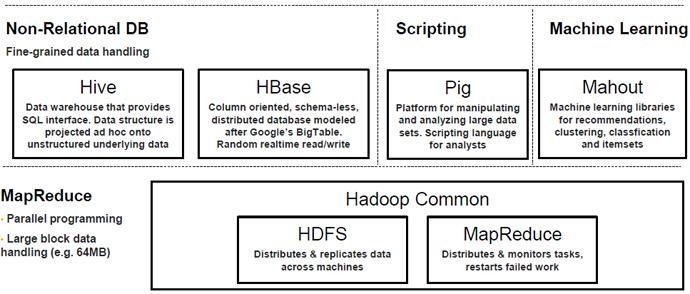

The Hadoop “brand” covers many different tools. The first two listed below are the core parts of Hadoop:

- Hadoop Distributed File System (HDFS) is a virtual file system that looks like any other file system, except that when you move a file onto HDFS, the file is split into many smaller pieces, and each piece is replicated and stored on several servers (3 by default, but this can be customized) for fault tolerance.

- Hadoop MapReduce is a way to split every request into smaller requests that are sent to many small servers, allowing truly scalable use of CPU power (describing MapReduce in detail would deserve a dedicated post).

- HBase is inspired by Google’s BigTable. HBase is a non-relational, scalable, and fault-tolerant database that is layered on top of HDFS. HBase is written in Java. Each row is identified by a key and consists of an arbitrary number of columns that can be grouped into column families.

- ZooKeeper is a centralized service for maintaining configuration information, naming, providing distributed synchronization, and providing group services. Zookeeper is used by HBase, and can be used by MapReduce programs.

- Solr / Lucene as search engine. This query engine library has been developed by Apache for more than 10 years.

- Languages. Two languages are identified as original Hadoop languages: Pig and Hive. For instance, you can use them to develop MapReduce processes at a higher level than hand-written MapReduce procedures. Other languages may be used, like C, Java or JAQL. SQL can be used too, through JDBC or ODBC connectors (or directly in the languages).
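To make the HDFS idea above more concrete, here is a minimal sketch of splitting a file into blocks and placing three replicas of each block on different servers. It is a toy model, not the HDFS implementation: the function names, the tiny block size and the round-robin placement are all assumptions made for illustration (real HDFS uses much larger blocks and a smarter placement policy).

```python
def split_into_blocks(data: bytes, block_size: int) -> list[bytes]:
    """Cut a file's bytes into fixed-size blocks (the last one may be shorter)."""
    return [data[i:i + block_size] for i in range(0, len(data), block_size)]


def place_replicas(num_blocks: int, servers: list[str], replication: int = 3) -> dict[int, list[str]]:
    """Assign each block to `replication` distinct servers, round-robin.

    Round-robin is an illustrative assumption, not the real HDFS policy.
    """
    if replication > len(servers):
        raise ValueError("need at least as many servers as replicas")
    return {
        block_id: [servers[(block_id + r) % len(servers)] for r in range(replication)]
        for block_id in range(num_blocks)
    }


def readable_after_failure(placement: dict[int, list[str]], failed: set[str]) -> bool:
    """A file stays readable if every block keeps at least one live replica."""
    return all(any(s not in failed for s in replicas) for replicas in placement.values())


blocks = split_into_blocks(b"x" * 1000, block_size=256)
placement = place_replicas(len(blocks), ["node1", "node2", "node3", "node4"])
print(len(blocks), placement[0])
print(readable_after_failure(placement, {"node1", "node2"}))
```

With three replicas, losing any two servers still leaves at least one copy of every block, which is why no RAID is needed on the individual machines.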

Even though the best-known Hadoop distribution is provided by a specialized vendor named Cloudera (others come from MapR, Hortonworks, and of course Apache itself), big vendors are positioning themselves on this technology:

- IBM has BigInsights (the Cloudera distribution plus GPFS, their own file system offered as an alternative to HDFS) and has recently acquired many niche players in the analytics and big data market (such as Platform Computing, whose product enhances the capabilities and performance of MapReduce).

- Oracle has launched a Big Data machine. Also based on Cloudera, this server is dedicated to the storage and use of unstructured content (structured content stays on Exadata).

- Informatica has a tool called HParser that complements PowerCenter. It is built to launch Informatica processes in MapReduce mode, distributed across the Hadoop servers.

- Microsoft has a dedicated Hadoop version, supported by Apache, for Microsoft Windows and for Azure (their cloud solution), with deep native integration with SQL Server 2012.

- Some very large database solutions like EMC Greenplum (partnering with MapR), HP Vertica (partnering with Cloudera), Teradata Aster Data (partnering with Hortonworks) or SAP Sybase IQ are able to connect directly to HDFS.

What is a “Hadoop”? Explaining Big Data to the C-Suite

Keep hearing about Big Data and Hadoop? Having a hard time explaining what is behind the curtain?

The term “big data” comes from computational sciences to describe scenarios where the volume of the data outstrips the tools to store it or process it. So when researchers have to run analysis on massive data sets, they leverage algorithms to separate the signal from the noisy data.

As huge data sets invaded the corporate world, a new set of tools emerged to help process big data. Hadoop is an emerging framework for Web 2.0 and enterprise businesses that are dealing with data deluge challenges: storing, processing, indexing, and analyzing large amounts of data as part of their business requirements.

So what’s the big deal? The first phase of e-commerce was primarily about cost and enabling transactions, and everyone got really good at this. Then we saw differentiation around convenience: fulfillment excellence (e.g., Amazon Prime) or relevant recommendations (if you bought this, then you may like that: the next best offer).

Then the game shifted as new data mashups became possible: seeing who is talking to whom in your social network, seeing who you are transacting with via credit-card data, looking at what you are visiting via clickstreams, measuring the influence of ad click-throughs, leveraging where you are standing via mobile GPS location data, and so on.

The differentiation is shifting to turning volumes of data into useful insights to sell more effectively. For instance, eBay apparently has 9 petabytes of data in its Hadoop and Teradata clusters. With 97 million active buyers and sellers, it has 2 billion page views and 75 billion database calls each day. eBay, like others, is racing to put in the analytics infrastructure to (1) collect real-time data; (2) process data as it flows; (3) explore and visualize.

The continuous challenge in Web 2.0 is how to improve site relevance and performance, understand user behavior, and gain predictive insight to influence decisions.

This is a never-ending arms race as each firm tries to become the portal of choice in a fast-changing world. Consumer-oriented industries (travel, retail, financial services, digital media, search, etc.) are all facing similar real-time information dynamics.

Take, for instance, the competitive world of travel: airlines, hotels, car rentals, vacation rentals, etc. Every site has to improve at analytics and machine learning as the contextual data is changing by the second: inventory, pricing, customer comments, peer recommendations, political/economic hotspots, natural disasters like earthquakes, etc. Without a sophisticated real-time analytics playbook, sites can become less relevant very quickly.

Hadoop has rapidly emerged as a viable platform for Big Data analytics. Many experts believe Hadoop will subsume many of the data warehousing tasks presently done by traditional relational systems. This will be a huge shift in how IT apps are engineered.

Hadoop Quick Overview

So, what is Apache Hadoop? A scalable, fault-tolerant distributed system for data storage and processing (open source under the Apache license). Core Hadoop has two main systems:

- Hadoop Distributed File System (HDFS): self-healing high-bandwidth clustered storage.

- MapReduce: distributed fault-tolerant resource management and scheduling coupled with a scalable data programming abstraction.

Its key advantages are:

- Flexibility – Store any data, run any analysis.

- Scalability – Start at 1TB/3-nodes grow to petabytes/1000s of nodes.

- Economics – Cost per TB at a fraction of traditional options.
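The MapReduce “programming abstraction” mentioned above is easier to grasp with a tiny example. The sketch below runs the classic word count on a single machine in plain Python; the function names are hypothetical and there is no Hadoop involved, but the three phases (map, shuffle, reduce) are the ones a real job goes through across many servers.

```python
from collections import defaultdict


def map_phase(document: str):
    """Map: emit a (word, 1) pair for every word in one input record."""
    for word in document.lower().split():
        yield word, 1


def shuffle(pairs):
    """Shuffle: group all values by key, as the framework does between map and reduce."""
    groups = defaultdict(list)
    for key, value in pairs:
        groups[key].append(value)
    return groups


def reduce_phase(key: str, values: list[int]):
    """Reduce: collapse all values for one key into a single result."""
    return key, sum(values)


def word_count(documents: list[str]) -> dict[str, int]:
    pairs = [pair for doc in documents for pair in map_phase(doc)]
    return dict(reduce_phase(key, values) for key, values in shuffle(pairs).items())


counts = word_count(["big data big cluster", "data node"])
print(counts)
```

Because each call to `map_phase` only looks at one record and each call to `reduce_phase` only looks at one key, both phases can be spread over as many servers as are available.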

Traditional relational databases and data warehouse products excel at OLAP and OLTP workloads over structured data. These form the underpinnings of most IT applications. Use relational databases when dealing with (1) Interactive OLAP Analytics; (2) Multistep ACID Transactions and (3) 100% SQL Compliance.

It is becoming increasingly difficult for classic techniques to support the wide range of use cases and workloads that power the next wave of digital business.

Hadoop is designed to solve a different problem: the fast, reliable analysis of structured, unstructured, and complex data.

Hadoop and related software are designed for the 3 V’s: (1) Volume – Commodity hardware and open source software lower cost and increase capacity; (2) Velocity – Data ingest speed is aided by append-only and schema-on-read design; and (3) Variety – Multiple tools to structure, process, and access data.
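“Append-only” and “schema-on-read” can sound abstract, so here is a small sketch of the idea. Raw lines are stored exactly as they arrive (fast ingest, no validation), and a structure is only projected onto them when somebody reads. The in-memory list and the function names are assumptions standing in for files on HDFS and a real query tool.

```python
import json

raw_store: list[str] = []  # append-only list standing in for raw files on HDFS


def ingest(line: str) -> None:
    """Write path: append the raw line untouched, with no parsing or validation."""
    raw_store.append(line)


def read_with_schema(fields: list[str]):
    """Read path: parse each line now and project only the requested fields."""
    for line in raw_store:
        try:
            record = json.loads(line)
        except json.JSONDecodeError:
            continue  # unreadable lines are skipped at read time, not rejected at write time
        if isinstance(record, dict):
            yield {field: record.get(field) for field in fields}


ingest('{"user": "a", "page": "/home", "ms": 120}')
ingest('{"user": "b", "page": "/cart"}')
ingest("not json at all")
rows = list(read_with_schema(["user", "ms"]))
print(rows)
```

A different analyst could read the same raw lines tomorrow with a different list of fields, which is the “variety” part of the argument.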

As a result, many IT Engineering teams are deploying the Hadoop ecosystem alongside their legacy IT applications, which allows them to combine old data and new data sets in powerful new ways. It also allows them to offload analysis from the data warehouse (and in some cases, pre-process before putting in the data warehouse).

Technically, Hadoop, a Java-based framework, consists of two elements: reliable, very large, low-cost data storage using the Hadoop Distributed File System (HDFS), and a high-performance parallel/distributed data processing framework called MapReduce.

HDFS is self-healing high-bandwidth clustered storage. Map-Reduce is essentially fault tolerant distributed computing. For more see our primer on Big Data, Hadoop and in-memory Analytics.

Scenarios for Using Hadoop

When a user types a query, it isn’t practical to exhaustively scan millions of items. Instead, it makes sense to create an index and use it to rank items and find the best matches. Hadoop provides a distributed indexing capability.

Hadoop runs on a cluster of commodity, shared-nothing x86 servers. You can add or remove servers in a Hadoop cluster (sizes range from 50 or 100 to 2000+ nodes) at will; the system detects and compensates for hardware or system problems on any server. Hadoop is self-healing and fault tolerant. It can deliver data, and can run large-scale, high-performance batch processing jobs, in spite of system changes or failures.
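The indexing scenario described at the start of this section can be sketched in a few lines. The example builds an inverted index (term to document ids) in a map-and-group style and then ranks documents by how many query terms they contain. The documents, function names and the very naive scoring are assumptions for illustration only; a production search engine such as Lucene does far more.

```python
from collections import defaultdict


def map_document(doc_id: int, text: str):
    """Map step: emit (term, doc_id) once per distinct term in the document."""
    for term in set(text.lower().split()):
        yield term, doc_id


def build_index(documents: dict[int, str]) -> dict[str, set[int]]:
    """Group step: collect, for every term, the set of documents containing it."""
    index = defaultdict(set)
    for doc_id, text in documents.items():
        for term, emitted_id in map_document(doc_id, text):
            index[term].add(emitted_id)
    return index


def search(index: dict[str, set[int]], query: str) -> list[int]:
    """Rank documents by the number of query terms they contain (ties broken by id)."""
    scores = defaultdict(int)
    for term in query.lower().split():
        for doc_id in index.get(term, ()):
            scores[doc_id] += 1
    return sorted(scores, key=lambda doc_id: (-scores[doc_id], doc_id))


docs = {1: "cheap hotel paris", 2: "luxury hotel rome", 3: "cheap flight rome"}
index = build_index(docs)
results = search(index, "cheap rome")
print(results)
```

The query only touches the index entries for its own terms, so it never has to scan the full document set.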

Three distinct scenarios for Hadoop are:

1) Hadoop as an ETL and Filtering Platform – One of the biggest challenges with high-volume data sources is extracting valuable signal from a lot of noise. Loading large, raw data into a MapReduce platform for initial processing is a good way to go. Hadoop platforms can read in the raw data, apply appropriate filters and logic, and output a structured summary or refined data set. This output (e.g., hourly index refreshes) can be further analyzed or serve as an input to a more traditional analytic environment like SAS. Typically only a small percentage of a raw data feed is required for any business problem. Hadoop becomes a great tool for extracting these pieces.

2) Hadoop as an exploration engine – Once the data is in the MapReduce cluster, using tools to analyze data where it sits makes sense. As the refined output is in a Hadoop cluster, new data can be added to the existing pile without having to re-index all over again. In other words, new data can be added to existing data summaries. Once the data is distilled, it can be loaded into corporate systems so users have wider access to it.

3) Hadoop as an Archive – Most historical data doesn’t need to be accessed and kept in a SAN environment. This historical data is usually archived by tape or disk to secondary storage or sent offsite. When this data is needed for analysis, it’s painful and costly to retrieve it and load it back up, so most people don’t bother using historical data for their analytics. With cheap storage in a distributed cluster, lots of data can be kept “active” for continuous analysis. Hadoop is efficient: it allows better utilization of hardware by allowing the generation of different index types in one cluster.
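The first two scenarios (filter the raw feed, then keep adding new data to an existing summary) can be illustrated with a small sketch. The log format (`timestamp status path`), the “errors per hour” summary and the function names are all hypothetical; the point is only that malformed or uninteresting lines are dropped early and that a new batch is merged into the summary without reprocessing the old one.

```python
from collections import Counter


def parse(line: str):
    """Parse 'YYYY-MM-DDTHH:MM:SS status path'; return None for malformed lines."""
    parts = line.split()
    if len(parts) != 3 or not parts[1].isdigit():
        return None
    timestamp, status, path = parts
    return timestamp[:13], int(status), path  # first 13 characters identify the hour


def hourly_errors(lines) -> Counter:
    """Filter step: keep only server errors (status >= 500) and count them per hour."""
    summary = Counter()
    for line in lines:
        record = parse(line)
        if record is None:
            continue
        hour, status, _path = record
        if status >= 500:
            summary[hour] += 1
    return summary


batch1 = [
    "2012-03-01T10:15:00 200 /home",
    "2012-03-01T10:20:00 500 /cart",
    "garbage",
    "2012-03-01T11:05:00 503 /pay",
]
batch2 = ["2012-03-01T11:30:00 500 /cart"]

summary = hourly_errors(batch1)
summary.update(hourly_errors(batch2))  # Counter.update adds counts to the existing summary
print(dict(summary))
```

Only two of the four lines in the first batch survive the filter, which matches the observation that a small share of a raw feed is usually what the business question needs.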

The Hadoop Stack

It’s important to differentiate Hadoop from the Hadoop stack. Firms like Cloudera sell a set of capabilities around Hadoop called Cloudera’s Distribution for Hadoop (CDH). This is a set of projects and management tools designed to lower the cost and complexity of administration and production support services; this includes 24/7 problem resolution support, consultative support, and support for certified integrations.

The 2011 version of CDH looked like this:

The 2012 version of CDH (Apache) looks like this:

The introduction of the Hadoop stack is changing business intelligence (reporting, analytics, data mining), which has been dominated by very expensive relational databases and data warehouse appliance products.

What is Hadoop good for?

Searching, log processing, recommendation systems, data warehousing, video and image analysis, and archiving seem to be the initial uses. One prominent space where Hadoop is playing a big role is data-driven websites. The four primary areas are:

1) To aggregate “data exhaust” – messages, posts, blog entries, photos, video clips, maps, web graph…

2) To give data context – friends networks, social graphs, recommendations, collaborative filtering…

3) To keep apps running – web logs, system logs, system metrics, database query logs…

4) To deliver novel mashup services – mobile location data, clickstream data, SKUs, pricing…

Let’s look at a few real-world examples from LinkedIn, CBS Interactive, Explorys and Foursquare. Walt Disney, Walmart, General Electric, Nokia, and Bank of America are also applying Hadoop to a variety of tasks including marketing, advertising, and sentiment and risk analysis. IBM used the software as the engine for its Watson computer, which competed against champions of the TV game show Jeopardy.

Hadoop @ LinkedIn

LinkedIn is a massive data hoard whose value is connections. It currently computes more than 100 billion personalized recommendations every week, powering an ever-growing assortment of products, including Jobs You May be Interested In, Groups You May Like, News Relevance, and Ad Targeting.

LinkedIn leverages Hadoop to transform raw data into rich features using knowledge aggregated from LinkedIn’s 125 million member base. LinkedIn then uses Lucene to do real-time recommendations, and also Lucene on Hadoop to bridge offline analysis with user-facing services. The streams of user-generated information, referred to as “social media feeds”, may contain valuable, real-time information on LinkedIn members’ opinions, activities, and mood states.

CBS Interactive - Leveraging Hadoop

CBS Interactive is using Hadoop as its web analytics platform, processing one billion weblogs daily (up from 250 million events per day) from hundreds of web site properties.

Who is CBS Interactive? They are the online division of CBS, the broadcast network. They are a top 10 global web property and the largest premium online content network. Their brands include CNET, Last.fm, TV.com, CBS Sports and 60 Minutes, to name a few.

CBS Interactive migrated processing from a proprietary platform to Hadoop to crunch web metrics. The goal was to achieve more robustness, fault tolerance and scalability, and a significant reduction of processing time to meet the SLA (over six hours saved so far). To enable this they built an Extraction, Transformation and Loading (ETL) framework called Lumberjack, based on Python and streaming.
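Lumberjack itself is not shown in this post, so the following is only a sketch of what a Python job in the streaming style generally looks like, assuming that “streaming” here refers to Hadoop Streaming: the mapper turns input lines into tab-separated key/value lines, the framework sorts them by key, and the reducer aggregates each run of identical keys. The `site url` log format is hypothetical, and the sort is simulated locally with `sorted()`.

```python
from itertools import groupby


def mapper(lines):
    """Emit 'site<TAB>1' for every weblog line of the (hypothetical) form 'site url'."""
    for line in lines:
        fields = line.split()
        if fields:
            yield f"{fields[0]}\t1"


def reducer(sorted_lines):
    """Sum the counts per key; the input must already be sorted by key."""
    pairs = (line.split("\t") for line in sorted_lines)
    for site, group in groupby(pairs, key=lambda pair: pair[0]):
        yield f"{site}\t{sum(int(count) for _site, count in group)}"


log = ["cnet.com /reviews", "tv.com /shows", "cnet.com /news"]
# sorted() stands in for the sort the framework performs between map and reduce
output = list(reducer(sorted(mapper(log))))
print(output)
```

In a real streaming job the two functions would live in separate scripts reading from standard input and writing to standard output, which is what makes plain Python usable on a Java framework.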

Explorys and Cleveland Clinic

Explorys, founded in 2009 in partnership with the Cleveland Clinic, is one of the largest clinical repositories in the United States with 10 million lives under contract. The Explorys healthcare platform is based upon a massively parallel computing model that enables subscribers to search and analyze patient populations, treatment protocols, and clinical outcomes. With billions of clinical and operational events already curated, Explorys helps healthcare leaders leverage analytics for break-through discovery and the improvement of medicine. HBase and Hadoop are at the center of Explorys. Already ingesting billions of anonymized clinical records, Explorys provides a powerful and HIPAA compliant platform for accelerating discovery.

Hadoop @ Orbitz

Travel (air, hotel, car rentals) is an incredibly competitive space. Take the challenge of hotel ranking. In 2011, Orbitz.com generated ~1.5 million air searches and ~1 million hotel searches a day. All this activity generates massive amounts of data: over 500 GB/day of log data. The challenge was that the existing data infrastructure was expensive and difficult to use for storing and processing this data.

Orbitz needed an infrastructure that provides (1) long-term storage of large data sets; (2) open access for developers and business analysts; (3) ad hoc querying of data and rapid deployment of reporting applications. They moved to Hadoop and Hive to provide reliable and scalable storage and processing of data on inexpensive commodity hardware. Hive is an open-source data warehousing solution built on top of Hadoop which allows easy data summarization, ad hoc querying and analysis of large datasets stored in Hadoop. Hive simplifies Hadoop data analysis: users can use SQL rather than writing low-level custom code. High-level queries are compiled into Hadoop MapReduce jobs.
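To see what “queries are compiled into MapReduce jobs” means, consider a hypothetical query such as `SELECT hotel_id, COUNT(*) FROM searches WHERE city = 'Paris' GROUP BY hotel_id`. The sketch below shows, in plain Python, the kind of map and reduce functions such a query corresponds to. The table, its columns and the function names are invented for the example, and this is not how Hive’s compiler is actually implemented.

```python
from collections import defaultdict


def map_row(row: dict):
    """WHERE becomes a filter in the mapper; the GROUP BY column becomes the key."""
    if row["city"] == "Paris":
        yield row["hotel_id"], 1


def reduce_group(hotel_id: str, ones: list[int]):
    """COUNT(*) becomes a sum over the values collected for one key."""
    return hotel_id, sum(ones)


def run_query(rows: list[dict]) -> dict[str, int]:
    groups = defaultdict(list)
    for row in rows:
        for key, value in map_row(row):
            groups[key].append(value)
    return dict(reduce_group(key, values) for key, values in groups.items())


searches = [
    {"hotel_id": "h1", "city": "Paris"},
    {"hotel_id": "h2", "city": "Rome"},
    {"hotel_id": "h1", "city": "Paris"},
    {"hotel_id": "h3", "city": "Paris"},
]
result = run_query(searches)
print(result)
```

The analyst writes one line of SQL; the two functions are the part they no longer have to write by hand.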

Hadoop @ Foursquare

foursquare is a mobile + location + social networking startup aimed at letting your friends in almost every country know where you are and figuring out where they are.

As a platform, foursquare is now aware of 25+ million venues worldwide, each of which can be described by unique signals about who is coming to these places, when, and for how long. To reward and incentivize users, foursquare allows frequent users to collect points, prize “badges,” and eventually coupons for check-ins.

Foursquare is built on enabling better mobile + location + social networking by applying machine learning algorithms to the collective movement patterns of millions of people. The ultimate goal is to build new services which help people better explore and connect with places.

Foursquare engineering employs a variety of machine learning algorithms to distill check-in signals into useful data for the app and platform. foursquare is powered by a social recommendation engine and real-time suggestions based on a person’s social graph.

Matthew Rathbone, foursquare engineering, describes the data analytics challenge as follows:

“With over 500 million check-ins last year and growing, we log a lot of data. We use that data to do a lot of interesting analysis, from finding the most popular local bars in any city, to recommending people you might know. However, until recently, our data was only stored in production databases and log files. Most of the time this was fine, but whenever someone non-technical wanted to do some ad-hoc data exploration, it required them knowing SCALA and being able to query against production databases.

This has become a larger problem as of late, as many of our business development managers, venue specialists, and upper management eggheads need access to the data in order to inform some important decisions. For example, which venues are fakes or duplicates (so we can delete them), what areas of the country are drawn to which kinds of venues (so we can help them promote themselves), and what are the demographics of our users in Belgium (so we can surface useful information)?”

To enable easy access to data, foursquare engineering decided to use Apache Hadoop and Apache Hive in combination with a custom data server (built in Ruby), all running in Amazon EC2. The data server is built using Rails, MongoDB, Redis, and Resque and communicates with Hive using the Ruby Thrift client.

What Data Projects is Hadoop Driving?

Now that we have dispensed with examples and you are eager to get started, what data-driven projects do you undertake?

Where do you start? Do you do legacy application retrofits to leverage the Hadoop stack? Do you do large scale data center upgrades to handle the terabytes – petabytes – exabytes load? Do you do a Proof of Concept (PoC) project to investigate technologies?

Hadoop and Big Data Vendor Landscape…

Which vendors do you engage with, and for what? How do you make sense of the landscape?

Jeff Kelly @ Wikibon presents a nice Big Data market segmentation landscape graphic that I found quite interesting.

Lots of new entrants in this space are raising the innovation quotient. According to GigaOM:

“Cloudera which is synonymous with Hadoop has raised $76 million since 2009. Newcomers MapR and Hortonworks have raised $29 million and $50 million. And that’s just at the distribution layer, which is the foundation of any Hadoop deployment. Up the stack, Datameer, Karmasphere and Hadapt have each raised around $10 million, and then there are newer funded companies such as Zettaset, Odiago and Platfora. Accel Partners has started a $100 million big data fund to feed applications utilizing Hadoop and other core big data technologies. If anything, funding around Hadoop should increase in 2012, or at least cover a lot more startups.”

Summary

Hadoop usage and penetration are growing as more analysts, programmers and, increasingly, processes “use” data. Accelerating data growth drives performance challenges, load time challenges and hardware cost optimization.

The data growth chart below gives you a sense of how quickly we are creating digital data. A few years ago terabytes were considered a big deal. Now exabytes are the new terabytes. Making sense of large data volumes at real-time speed is where we are heading.

- 1000 Kilobytes = 1 Megabyte

- 1000 Megabytes = 1 Gigabyte

- 1000 Gigabytes = 1 Terabyte

- 1000 Terabytes = 1 Petabyte [where most SME corporations are?]

- 1000 Petabytes = 1 Exabyte [where most large corporations are?]

- 1000 Exabytes = 1 Zettabyte [where leaders like Facebook and Google are]

- 1000 Zettabytes = 1 Yottabyte

- 1000 Yottabytes = 1 Brontobyte

- 1000 Brontobytes = 1 Geopbyte
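The unit ladder above is simple arithmetic, and a few lines of Python make it easy to play with. The helper names are hypothetical, the conversion uses the same decimal steps of 1000 as the list, and it stops at the yottabyte because the names beyond that are informal. The two figures used in the example (9 petabytes, 500 GB of logs per day) are the ones quoted earlier in this post.

```python
UNITS = ["KB", "MB", "GB", "TB", "PB", "EB", "ZB", "YB"]


def humanize(num_bytes: int) -> str:
    """Express a byte count in the largest unit of the list that it fills.

    Counts below 1 KB are shown as a fraction of a KB.
    """
    exponent = 1
    while exponent < len(UNITS) and num_bytes >= 1000 ** (exponent + 1):
        exponent += 1
    return f"{num_bytes / 1000 ** exponent:g} {UNITS[exponent - 1]}"


def days_to_fill(capacity_bytes: int, bytes_per_day: int) -> float:
    """How many days a steady daily feed needs to reach a given capacity."""
    return capacity_bytes / bytes_per_day


print(humanize(9 * 1000**5))                       # 9 PB
print(humanize(500 * 1000**3))                     # 500 GB
print(days_to_fill(1000**5, 500 * 1000**3))        # 2000.0
```

At 500 GB a day, a single log feed takes 2000 days (about five and a half years) to reach one petabyte, which gives a feel for how large the petabyte-scale clusters mentioned above really are.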

Hadoop’s framework brings a new set of challenges related to the compute infrastructure and underlying network architectures. As Hadoop graduates from pilots to a mission-critical component of the enterprise IT infrastructure, integrating information held in Hadoop and in enterprise RDBMS becomes imperative.

Finally, adoption of Hadoop in the enterprise will not be an easy journey, and the hardest steps are often the first. Then, they get harder! Weaning the IT organizations off traditional DB and EDW models to use a new approach can be compared to moving the moon out of its orbit with a spatula… but it can be done.

2012 may be the year Hadoop crosses into mainstream IT.

Sources and References

1) Hadoop is a Java-based software framework for distributed processing of data-intensive transformations and analyses.

Apache Hadoop = HDFS + MapReduce

- Hadoop Distributed File System (HDFS) for storing massive datasets using low-cost storage

- MapReduce, the algorithm on which Google built its empire

2) Related components often deployed with Hadoop – HBase, Hive, Pig, Oozie, Flume and Sqoop. These components form the core Hadoop Stack.

- HBase is an open-source, distributed, versioned, column-oriented store modeled after Google’s BigTable architecture. HBase scales to billions of rows and millions of columns, while ensuring that write and read performance remain constant.

- Hive is a data warehouse infrastructure built on top of Apache Hadoop

- Pig, a high-level query language for large-scale data processing

- ZooKeeper, a toolkit of coordination primitives for building distributed systems
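The HBase description above (rows identified by a key, columns grouped into families, versioned values) maps naturally onto nested dictionaries. The class below is an in-memory toy written for this post, not the HBase client API: the class name, method names and the `family:qualifier` column spelling are assumptions chosen to keep the sketch short.

```python
import time
from collections import defaultdict


class TinyTable:
    """Toy model: row key -> 'family:qualifier' -> list of (timestamp, value) versions."""

    def __init__(self, families):
        self.families = set(families)  # column families are fixed when the table is created
        self.rows = defaultdict(lambda: defaultdict(list))

    def put(self, row_key, column, value, timestamp=None):
        family, _, _qualifier = column.partition(":")
        if family not in self.families:
            raise KeyError(f"unknown column family: {family}")
        ts = time.time() if timestamp is None else timestamp
        self.rows[row_key][column].append((ts, value))  # old versions are kept

    def get(self, row_key, column):
        """Return the value with the newest timestamp, or None if the cell is empty."""
        versions = self.rows.get(row_key, {}).get(column, [])
        return max(versions, key=lambda version: version[0])[1] if versions else None


table = TinyTable(["info", "stats"])
table.put("patient42", "info:name", "Ann", timestamp=1)
table.put("patient42", "info:name", "Anna", timestamp=2)
table.put("patient42", "stats:visits", 7, timestamp=1)
print(table.get("patient42", "info:name"))
```

Any row can hold any qualifiers inside a family, which is why such a table can have “millions of columns” without a fixed schema.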

4) It’s important to understand what Hadoop doesn’t do. Big Data technology like the Hadoop stack does not deliver insight by itself; insights depend on analytics that result from combining the results of things like Hadoop MapReduce jobs with manageable “small data” already in the data warehouse (DW).

5) Foursquare and Hadoop case study write-up by Matthew Rathbone of the Foursquare Engineering team: http://engineering.foursquare.com/2011/02/28/how-we-found-the-rudest-cities-in-the-world-analytics-foursquare/

6) Presentation Big Data at FourSquare: http://engineering.foursquare.com/2011/03/24/big-data-foursquare-slides-from-our-recent-talk/

9) Data mining leveraging distributed file systems is a field with multiple techniques. These include: Hadoop, map-reduce; PageRank, topic-sensitive PageRank, spam detection, hubs-and-authorities; similarity search; shingling, minhashing, random hyperplanes, locality-sensitive hashing; analysis of social-network graphs; association rules; dimensionality reduction: UV, SVD, and CUR decompositions; algorithms for very-large-scale mining: clustering, nearest-neighbor search, gradient descent, support-vector machines, classification, and regression; and submodular function optimization.

10) Cloudera distribution for Hadoop (CDH).

| Product | Description |
| --- | --- |
| HDFS | Hadoop Distributed File System |
| MapReduce | Parallel data-processing framework |
| Hadoop Common | A set of utilities that support the Hadoop subprojects |
| HBase | Hadoop database for random read/write access |
| Hive | SQL-like queries and tables on large datasets |
| Pig | Data flow language and compiler |
| Oozie | Workflow for interdependent Hadoop jobs |
| Sqoop | Integration of databases and data warehouses with Hadoop |
| Flume | Configurable streaming data collection |
| ZooKeeper | Coordination service for distributed applications |
| Hue | User interface framework and software development kit (SDK) for visual Hadoop applications |

History of Hadoop - Interesting how Yahoo! was the home of Hadoop innovation but could not monetize this effectively.

Hadoop, Big Data, and Enterprise Business Intelligence

Many thanks to William Gardella and others for the content below:

Traditional enterprise data warehousing and Hadoop/Big Data are like apples and oranges – the well-known and trusted approach being challenged by a zesty newcomer (sweet oranges were introduced to Europe sometime in the 16th century). Is there room for both? How will these two very different approaches co-exist?

This post is an attempt to summarize the current state of play with Hadoop, “Big Data” and Enterprise BI, and what it means to existing users of enterprise business intelligence. See the list of articles at the end of the post for more detailed materials.

What is Hadoop?

Hadoop is open-source software that enables reliable, scalable, distributed computing on clusters of inexpensive servers. It is:

- Reliable: The software is fault tolerant; it expects and handles hardware and software failures

- Scalable: Designed for massive scale of processors, memory, and local attached storage

- Distributed: Handles replication. Offers a massively parallel programming model, MapReduce

Its main components include:

- HDFS: Hadoop Distributed File System

- HBase: Column oriented, non-relational, schema-less, distributed database modeled after Google’s BigTable. Promises “Random, real-time read/write access to Big Data”

- Hive: Data warehouse system that provides SQL interface. Data structure can be projected ad hoc onto unstructured underlying data

- Pig: A platform for manipulating and analyzing large data sets. High level language for analysts

- ZooKeeper: a centralized service for maintaining configuration information, naming, providing distributed synchronization, and providing group services

Image: William Gardella

Are Companies Adopting Hadoop?

Yes. According to a recent Ventana survey:

- More than one-half (54%) of organizations surveyed are using or considering Hadoop for large-scale data processing needs

- More than twice as many Hadoop users report being able to create new products and services and enjoy cost savings beyond those using other platforms; over 82% benefit from faster analyses and better utilization of computing resources

- 87% of Hadoop users are performing or planning new types of analyses with large scale data

- 94% of Hadoop users perform analytics on large volumes of data not possible before; 88% analyze data in greater detail; while 82% can now retain more of their data

- Organizations use Hadoop in particular to work with unstructured data such as logs and event data (63%)

- More than two-thirds of Hadoop users perform advanced analysis — data mining or algorithm development and testing

How Is It Being Used in Relation to Traditional BI and EDW?

Currently, Hadoop has carved out a clear niche next to conventional systems. Hadoop is good at handling batch processing of large sets of unstructured data, reliably, and at low cost. It does, however, require scarce engineering expertise, real-time analysis is challenging, and it is much less mature than traditional approaches. As a result, Hadoop is not typically being used for analyzing conventional structured data such as transaction data, customer information and call records, where traditional RDBMS tools are still better adapted:

“Hadoop is real, but it’s still quite immature. On the “real” side, Hadoop has already been adopted by many companies for extremely scalable analytics in the cloud. On the “immature” side, Hadoop is not ready for broader deployment in enterprise data analytics environments…” James Kobielus, Forrester Research.

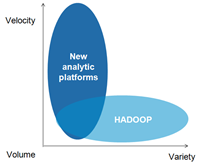

To considerably over-simplify: if we consider what are called the 3 ‘V’s of the data challenge, “Volume, Velocity, and Variety” (and there’s a fourth, Validity), then traditional data warehousing is great at Volume and Velocity (especially with the new analytic architectures), while Hadoop is good at Volume and Variety.

Today, Hadoop is being used as a:

- Staging layer: The most common use of Hadoop in enterprise environments is as “Hadoop ETL” — preprocessing, filtering, and transforming vast quantities of semi-structured and unstructured data for loading into a data warehouse.

- Event analytics layer: large-scale log processing of event data: call records, behavioral analysis, social network analysis, clickstream data, etc.

- Content analytics layer: next-best action, customer experience optimization, social media analytics. MapReduce provides the abstraction layer for integrating content analytics with more traditional forms of advanced analysis.

What Does The Future Look Like?

It’s clear that Hadoop will become a key part of future enterprise data warehouse architectures:

“The bottom line is that Hadoop is the future of the cloud EDW, and its footprint in companies’ core EDW architectures is likely to keep growing throughout this decade.” James Kobielus, Forrester Research

But (despite some of the almost religious fervor of its backers) Hadoop is unlikely to supplant the role of traditional data warehouse and business intelligence:

“There are places for the traditional things associated with high-quality, high-reliability data in data warehouses, and then there’s the other thing that gets us to the extreme edge when we want to look at data in the raw form” Yvonne Genovese, Gartner Inc.

Companies will continue to use conventional BI for mainstream business users to do ad hoc queries and reports, but they will supplement that effort with a big-data analytics environment optimized to handle a torrent of unstructured data – which, of course, has been part of the goal of enterprise data warehousing for a long time.

Hadoop is particularly useful when:

- Complex information processing is needed

- Unstructured data needs to be turned into structured data

- Queries can’t be reasonably expressed using SQL

- Heavily recursive algorithms

- Complex but parallelizable algorithms are needed, such as geo-spatial analysis or genome sequencing

- Machine learning

- Data sets are too large to fit into database RAM or disks, or require too many cores (tens of TB up to PB)

- Data value does not justify expense of constant real-time availability, such as archives or special interest info, which can be moved to Hadoop and remain available at lower cost

- Results are not needed in real time

- Fault tolerance is critical

- Significant custom coding would be required to handle job scheduling

Does Hadoop and Big Data Solve All Our Data Problems?

Hadoop provides a new, complementary approach to traditional data warehousing that helps deliver on some of the most difficult challenges of enterprise data warehouses. Of course, it’s not a panacea, but by making it easier to gather and analyze data, it may help move the spotlight away from the technology towards the more important limitations on today’s business intelligence efforts: information culture and the limited ability of many people to actually use information to make the right decisions.

Apache Hadoop

Apache Hadoop is a framework for running applications on large clusters built of commodity hardware. The Hadoop framework transparently provides applications with both reliability and data motion. Hadoop implements a computational paradigm named Map/Reduce, where the application is divided into many small fragments of work, each of which may be executed or re-executed on any node in the cluster. In addition, it provides a distributed file system (HDFS) that stores data on the compute nodes, providing very high aggregate bandwidth across the cluster. Both MapReduce and the Hadoop Distributed File System are designed so that node failures are automatically handled by the framework.
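The Map/Reduce paradigm described above can be sketched in a few lines of plain Python. This is a single-process illustration of the idea, not Hadoop's API: the function names (`map_phase`, `shuffle`, `reduce_phase`, `word_count`) are assumptions chosen for this example, and a real cluster would run the map and reduce calls on different nodes.

```python
from collections import defaultdict
from typing import Dict, Iterable, Iterator, List, Tuple


def map_phase(fragment: str) -> Iterator[Tuple[str, int]]:
    """Map: emit a (word, 1) pair for every word in one input fragment."""
    for word in fragment.lower().split():
        yield word, 1


def shuffle(pairs: Iterable[Tuple[str, int]]) -> Dict[str, List[int]]:
    """Shuffle: group all emitted values by key."""
    grouped: Dict[str, List[int]] = defaultdict(list)
    for key, value in pairs:
        grouped[key].append(value)
    return grouped


def reduce_phase(key: str, values: List[int]) -> Tuple[str, int]:
    """Reduce: combine all values for one key into a single result."""
    return key, sum(values)


def word_count(fragments: Iterable[str]) -> Dict[str, int]:
    """Run map, shuffle, and reduce in sequence on one machine."""
    mapped: List[Tuple[str, int]] = []
    for fragment in fragments:
        # Each fragment is independent, so a cluster could map them in parallel.
        mapped.extend(map_phase(fragment))
    grouped = shuffle(mapped)
    return dict(reduce_phase(key, values) for key, values in grouped.items())


if __name__ == "__main__":
    print(word_count(["big data big", "data hadoop"]))
```

Because each call to `map_phase` depends only on its own fragment, a fragment whose node fails can simply be mapped again elsewhere, which is what makes re-execution safe.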

General Information

- Official Apache Hadoop Website: download, bug-tracking, mailing-lists, etc.

- Overview of Apache Hadoop

- FAQ Frequently Asked Questions.

- Distributions and Commercial Support for Hadoop (RPMs, Debs, AMIs, etc)

- PoweredBy, a growing list of sites and applications powered by Apache Hadoop

- Support

Related-Projects

- HBase, a Bigtable-like structured storage system for Hadoop HDFS

- Apache Pig is a high-level data-flow language and execution framework for parallel computation. It is built on top of Hadoop Core.

- Hive, a data warehouse infrastructure that allows SQL-like ad hoc querying of data (in any format) stored in Hadoop

- ZooKeeper is a high-performance coordination service for distributed applications.

- Hama, a distributed computing framework similar to Google's Pregel, based on BSP (Bulk Synchronous Parallel) computing techniques for massive scientific computations.

- Mahout, scalable Machine Learning algorithms using Hadoop

User Documentation

- GettingStartedWithHadoop (lots of details and explanation)

- QuickStart (for those who just want it to work now)

- Command Line Options for the Hadoop shell scripts.

- Troubleshooting: what to do when things go wrong

Setting up a Hadoop Cluster

- HowToConfigure Hadoop software

- Performance: getting extra throughput

- Virtual Clusters including Amazon AWS

- Virtual Hadoop: the theory

- How to set up a Virtual Cluster

- Running Hadoop on AmazonEC2

- Running Hadoop with AmazonS3

Tutorials

- Running_Hadoop_On_Ubuntu_Linux_(Single-Node_Cluster) A tutorial on installing, configuring and running Hadoop on a single Ubuntu Linux machine.

- Hadoop Windows/Eclipse Tutorial: How to develop Hadoop with Eclipse on Windows.

- Yahoo! Hadoop Tutorial: Hadoop setup, HDFS, and MapReduce

MapReduce

The MapReduce algorithm is the foundational algorithm of Hadoop, and is critical to understand.

- Examples

- Benchmarks

Contributed parts of the Hadoop codebase

- These are independent modules that are in the Hadoop codebase but not yet tightly integrated with the main project.

- HadoopStreaming (Useful for using Hadoop with other programming languages)

- DistributedLucene, a Proposal for a distributed Lucene index in Hadoop

- MountableHDFS, Fuse-DFS & other Tools to mount HDFS as a standard filesystem on Linux (and some other Unix OSs)

- HDFS-APIs in Perl, Python, PHP and other languages.

- Chukwa a data collection, storage, and analysis framework

- The Apache Hadoop Plugin for Eclipse (An Eclipse plug-in that simplifies the creation and deployment of MapReduce programs with an HDFS Administrative feature)

- HDFS-RAID Erasure Coding in HDFS
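HadoopStreaming, listed above, lets the map and reduce steps be ordinary programs that read lines from standard input and write tab-separated key/value lines to standard output. The sketch below follows that convention in Python. The input format (a year and a reading separated by whitespace), the function names, and the sample data are assumptions for illustration. In a real job the mapper and reducer would be separate scripts, and the framework, not `sorted`, would order the mapper output by key.

```python
from itertools import groupby
from typing import Iterable, Iterator


def mapper(lines: Iterable[str]) -> Iterator[str]:
    """Emit 'year<TAB>reading' for each well-formed 'year reading' line."""
    for line in lines:
        parts = line.split()
        if len(parts) != 2:
            continue  # skip malformed records
        year, reading = parts
        yield f"{year}\t{reading}"


def reducer(sorted_lines: Iterable[str]) -> Iterator[str]:
    """Emit the maximum reading per year; input must be sorted by key."""
    pairs = (line.rstrip("\n").split("\t", 1) for line in sorted_lines)
    for year, group in groupby(pairs, key=lambda pair: pair[0]):
        highest = max(int(reading) for _, reading in group)
        yield f"{year}\t{highest}"


if __name__ == "__main__":
    sample = ["1950 0", "1949 111", "1950 22"]
    # Local stand-in for the framework: map, sort by key, then reduce.
    for output_line in reducer(sorted(mapper(sample))):
        print(output_line)
```

The reducer relies on equal keys arriving next to each other, which is why the sort between the two steps matters.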

Developer Documentation

Related Resources

- Nutch Hadoop Tutorial (Useful for understanding Hadoop in an application context)

- IBM MapReduce Tools for Eclipse - Out of date. Use the Eclipse Plugin in the MapReduce/Contrib instead

- Hadoop IRC channel is #hadoop at irc.freenode.net.

- Using Spring and Hadoop (Discussion of possibilities to use Hadoop and Dependency Injection with Spring)

- Univa Grid Engine Integration A blog post about the integration of Hadoop with the Grid Engine successor Univa Grid Engine

- Hadoop Grid Engine Integration Open Grid Scheduler/Grid Engine Hadoop integration setup instructions.

- Hadoop Tutorial Series Learning progressively important core Hadoop concepts with hands-on experiments using the Cloudera Virtual Machine

- Dumbo Dumbo is a project that allows you to easily write and run Hadoop programs in Python.

- Hadoop distributed file system New Hadoop Connector Enables Ultra-Fast Transfer of Data between Hadoop and Aster Data's MPP Data Warehouse.

- HDFS Architecture Documentation An overview of the HDFS architecture, intended for contributors.

Welcome to Apache™ Hadoop™!

- What Is Apache Hadoop?

- Download Hadoop

- Who Uses Hadoop?

- News

- 27 December, 2011: release 1.0.0 available

- March 2011 - Apache Hadoop takes top prize at Media Guardian Innovation Awards

- January 2011 - ZooKeeper Graduates

- September 2010 - Hive and Pig Graduate

- May 2010 - Avro and HBase Graduate

- July 2009 - New Hadoop Subprojects

- March 2009 - ApacheCon EU

- November 2008 - ApacheCon US

- July 2008 - Hadoop Wins Terabyte Sort Benchmark

What Is Apache Hadoop?

The Apache™ Hadoop™ project develops open-source software for reliable, scalable, distributed computing.

The Apache Hadoop software library is a framework that allows for the distributed processing of large data sets across clusters of computers using a simple programming model. It is designed to scale up from single servers to thousands of machines, each offering local computation and storage. Rather than rely on hardware to deliver high availability, the library itself is designed to detect and handle failures at the application layer, thereby delivering a highly available service on top of a cluster of computers, each of which may be prone to failures.
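Handling failures at the application layer can be pictured with a short sketch: when one node fails to complete a task, the same task is simply run again on another node. The code below is a toy model in plain Python, not Hadoop's scheduler. The `NodeFailure` exception, the `run_with_reexecution` helper, and the two simulated nodes are hypothetical names introduced for this illustration.

```python
from typing import Callable, List, Sequence, TypeVar

T = TypeVar("T")
Task = Callable[[], T]
Node = Callable[[Task], T]


class NodeFailure(Exception):
    """Raised by a simulated node that cannot complete a task."""


def run_with_reexecution(task: Task, nodes: Sequence[Node]) -> T:
    """Try the task on each node in turn and return the first result."""
    failures: List[str] = []
    for node in nodes:
        try:
            return node(task)
        except NodeFailure as error:
            # The failure is detected in software; move on to the next node.
            failures.append(str(error))
    raise RuntimeError(f"task failed on every node: {failures}")


def healthy_node(task: Task) -> T:
    """Simulated node that runs the task normally."""
    return task()


def broken_node(task: Task) -> T:
    """Simulated node that always fails."""
    raise NodeFailure("node unavailable")


if __name__ == "__main__":
    result = run_with_reexecution(lambda: 6 * 7, [broken_node, healthy_node])
    print(result)
```

This only works when tasks can be repeated safely, which is one reason the work is split into small, independent fragments.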

The project includes these subprojects:

- Hadoop Common: The common utilities that support the other Hadoop subprojects.

- Hadoop Distributed File System (HDFS™): A distributed file system that provides high-throughput access to application data.

- Hadoop MapReduce: A software framework for distributed processing of large data sets on compute clusters.

- Avro™: A data serialization system.

- Cassandra™: A scalable multi-master database with no single points of failure.

- Chukwa™: A data collection system for managing large distributed systems.

- HBase™: A scalable, distributed database that supports structured data storage for large tables.

- Hive™: A data warehouse infrastructure that provides data summarization and ad hoc querying.

- Mahout™: A scalable machine learning and data mining library.

- Pig™: A high-level data-flow language and execution framework for parallel computation.

- ZooKeeper™: A high-performance coordination service for distributed applications.

Download Hadoop

Please head to the releases page to download a release of Apache Hadoop.

Who Uses Hadoop?

A wide variety of companies and organizations use Hadoop for both research and production. Users are encouraged to add themselves to the Hadoop PoweredBy wiki page.

News

27 December, 2011: release 1.0.0 available

Hadoop reaches 1.0.0! Full information about this milestone release is available at Hadoop Common Releases.

March 2011 - Apache Hadoop takes top prize at Media Guardian Innovation Awards

Described by the judging panel as a "Swiss army knife of the 21st century", Apache Hadoop picked up the innovator of the year award for having the potential to change the face of media innovations.

See The Guardian web site

January 2011 - ZooKeeper Graduates

Hadoop's ZooKeeper subproject has graduated to become a top-level Apache project.

Apache ZooKeeper can now be found at http://zookeeper.apache.org/

September 2010 - Hive and Pig Graduate

Hadoop's Hive and Pig subprojects have graduated to become top-level Apache projects.

Apache Hive can now be found at http://hive.apache.org/

Pig can now be found at http://pig.apache.org/

May 2010 - Avro and HBase Graduate

Hadoop's Avro and HBase subprojects have graduated to become top-level Apache projects.

Apache Avro can now be found at http://avro.apache.org/

Apache HBase can now be found at http://hbase.apache.org/

July 2009 - New Hadoop Subprojects

Hadoop is getting bigger!

- Hadoop Core is renamed Hadoop Common.

- MapReduce and the Hadoop Distributed File System (HDFS) are now separate subprojects.

- Avro and Chukwa are new Hadoop subprojects.

See the summary descriptions for all subprojects above. Visit the individual sites for more detailed information.